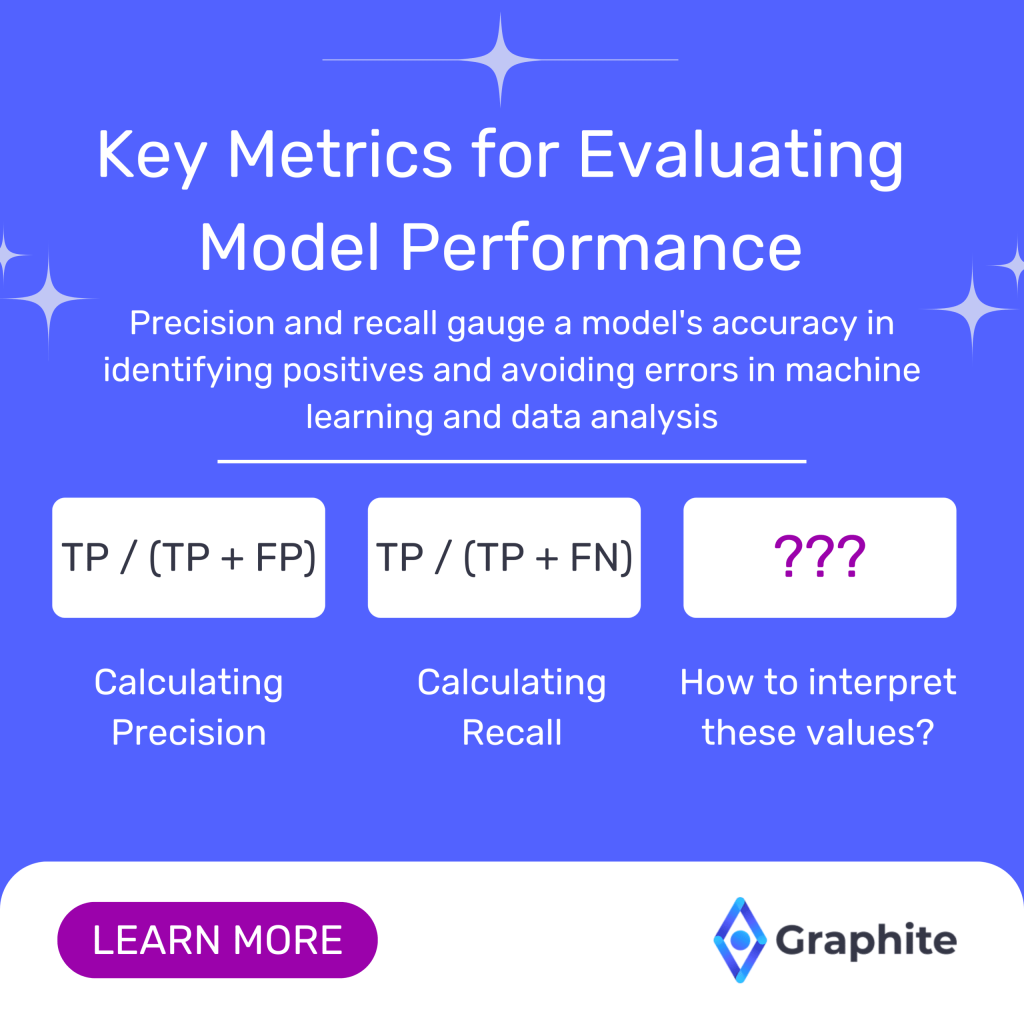

As a data scientist or machine learning enthusiast, you’ve probably come across the terms “precision” and “recall” multiple times in your journey. These two metrics play a crucial role in evaluating the performance of machine learning models. In this article, we will dive deep into the world of precision and recall, unraveling their meanings, exploring their importance, learning how to calculate them, and understanding their trade-off.

Defining Precision and Recall

Precision and recall are two important metrics used to evaluate the performance of a model in machine learning and data analysis. Both focus on the positive class. Precision reflects how many of the model’s positive predictions are correct, which penalizes false positives. Recall reflects how many of the actual positive samples the model finds, which penalizes false negatives.

Precision: A Closer Look

Precision, sometimes referred to as positive predictive value, measures how reliable a model’s positive predictions are: the fraction of samples predicted as positive that are actually positive. It answers the question, “Of all the positive predictions made by the model, how many were actually correct?”

Precision is commonly used in scenarios where the cost of false positives is relatively high. For example, in medical diagnosis, incorrectly diagnosing a healthy individual as diseased (a false positive) can lead to unnecessary treatments and psychological distress.

Mathematically, precision can be calculated as:

Precision = True Positives / (True Positives + False Positives)

Let’s consider an example to illustrate the concept of precision. Imagine a model that predicts whether a student will pass or fail an exam based on their study hours. The model predicts that 50 students will pass, out of which 40 actually pass and 10 fail. In this case, the true positives are 40 (students correctly predicted to pass) and the false positives are 10 (students incorrectly predicted to pass). Therefore, the precision of the model would be 40 / (40 + 10) = 0.8 or 80%.
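The same arithmetic can be written as a small Python function. This is a minimal sketch: the function name `precision` and the choice to return 0.0 when the model makes no positive predictions (where precision is undefined) are our own assumptions, not a fixed standard.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Fraction of positive predictions that are correct: TP / (TP + FP)."""
    predicted_positives = true_positives + false_positives
    if predicted_positives == 0:
        # No positive predictions at all: precision is undefined, so we return 0.0 by convention.
        return 0.0
    return true_positives / predicted_positives


# Student example: 50 predicted passes, 40 of which actually passed.
print(precision(true_positives=40, false_positives=10))  # 0.8
```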

Recall: An In-depth Understanding

Recall, also known as sensitivity or true positive rate, measures the fraction of actual positive samples that the model correctly identifies. It answers the question, “Of all the actual positive samples in the dataset, how many did the model identify?”

Recall is particularly valuable in scenarios where the cost of false negatives is high. For instance, when screening for a serious disease, failing to flag a patient who actually has it (a false negative) can delay treatment and have serious consequences.

To calculate recall, we use the following formula:

Recall = True Positives / (True Positives + False Negatives)

Continuing with the previous example, suppose the class has 100 students, of whom 60 actually pass the exam and 40 fail. The model correctly predicts 40 of the passing students (true positives) but predicts that the remaining 20 passing students will fail (false negatives). The recall of the model is therefore 40 / (40 + 20) ≈ 0.67, or about 67%.
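Recall follows the same pattern, with false negatives in the denominator instead of false positives. Again, this is a minimal sketch with a hypothetical `recall` helper that returns 0.0 by our own convention when there are no actual positives.

```python
def recall(true_positives: int, false_negatives: int) -> float:
    """Fraction of actual positives the model found: TP / (TP + FN)."""
    actual_positives = true_positives + false_negatives
    if actual_positives == 0:
        # No actual positives in the data: recall is undefined, so we return 0.0 by convention.
        return 0.0
    return true_positives / actual_positives


# Student example: 60 students actually pass; the model catches 40 of them.
print(round(recall(true_positives=40, false_negatives=20), 2))  # 0.67
```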

Both precision and recall are important metrics in evaluating the performance of a model. Depending on the specific problem and its associated costs, one metric may be more important than the other. It is essential to strike a balance between precision and recall to achieve the desired outcome.

The Importance of Precision and Recall in Model Evaluation

Understanding the significance of precision and recall in model evaluation is crucial for building effective and reliable machine learning models. Together, these metrics separate the two kinds of error a model can make on the positive class, false positives and false negatives, and help in fine-tuning the model for the problem at hand.

Balancing Precision and Recall

Precision and recall are interconnected metrics with a trade-off. Often, improving precision results in a decline in recall and vice versa. Achieving the right balance between the two metrics depends on the specific problem at hand and its associated risks and costs.

For instance, in a fraud detection system, we may prioritize recall to capture as many fraud cases as possible, even if it means sacrificing precision by classifying some legitimate transactions as fraudulent. On the other hand, in sentiment analysis where positive sentiment is the target class, precision might be more critical if labeling negative sentiment as positive (a false positive) would lead to incorrect analysis and insights.

Impact on Model Performance

Precision and recall provide valuable insights into the performance of a model. A high precision score indicates that few of the model’s positive predictions are false positives, so its positive predictions are usually correct. On the other hand, a high recall score suggests that the model effectively identifies most of the positive samples.

By examining both metrics together, we gain a more complete picture of the model’s strengths and weaknesses. For instance, high precision with low recall may indicate that the model is cautious, predicting the positive class only when it is very confident, while low precision with high recall may indicate that it labels too many samples as positive.
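To make these two patterns concrete, the sketch below compares two made-up models evaluated on data with 60 actual positives. The (TP, FP, FN) counts and the model names are purely illustrative assumptions, not results from a real model.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Compute (precision, recall) from confusion-matrix counts; 0.0 if a denominator is zero."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical (TP, FP, FN) counts; both models face the same 60 actual positives.
models = {
    "cautious": (20, 1, 40),  # predicts the positive class rarely
    "enthusiastic": (58, 30, 2),  # predicts the positive class often
}

for name, (tp, fp, fn) in models.items():
    p, r = precision_recall(tp, fp, fn)
    print(f"{name}: precision={p:.2f}, recall={r:.2f}")
```

The cautious model is right about 95% of the time when it predicts the positive class but finds only a third of the actual positives, while the enthusiastic model finds about 97% of them at the cost of a precision near 0.66.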

Calculating Precision and Recall

The Formula for Precision

To calculate precision, we divide the number of true positives (TP) by the sum of true positives and false positives (FP). In other words:

Precision = TP / (TP + FP)

The Formula for Recall

Recall can be calculated by dividing the number of true positives (TP) by the sum of true positives and false negatives (FN). In equation form:

Recall = TP / (TP + FN)
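In practice the counts usually come from comparing true labels with a model’s predictions. The following is a minimal, dependency-free sketch assuming binary labels where 1 marks the positive class; `confusion_counts` and `precision_recall` are hypothetical helper names, and the labels are invented.

```python
from typing import Sequence


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> tuple[int, int, int]:
    """Return (TP, FP, FN) for binary labels where 1 is the positive class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, fp, fn


def precision_recall(y_true: Sequence[int], y_pred: Sequence[int]) -> tuple[float, float]:
    """Precision = TP / (TP + FP), Recall = TP / (TP + FN); 0.0 when a denominator is zero."""
    tp, fp, fn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Toy labels, made up for illustration (1 = positive, 0 = negative).
y_true = [1, 1, 1, 1, 0, 0, 0, 1]
y_pred = [1, 1, 0, 1, 1, 0, 0, 0]
print(precision_recall(y_true, y_pred))  # (0.75, 0.6)
```

For real projects, scikit-learn’s `sklearn.metrics.precision_score` and `recall_score` compute the same quantities for binary labels.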

Interpreting Precision and Recall Values

What High Precision Indicates

A high precision value suggests that the model has few false positives. It means that when the model predicts a sample as positive, it is highly likely to be correct. This is particularly important in scenarios where the cost of false positives is significant.

What High Recall Indicates

On the other hand, a high recall value indicates that the model identifies a high proportion of positive samples. It means the model is effective in capturing the positive cases. High recall is crucial in scenarios where the cost of false negatives is high.

Both precision and recall are essential metrics in different contexts and should be considered together to understand the overall performance of a model.

Precision-Recall Trade-off

Understanding the Trade-off

The precision-recall trade-off refers to the tendency of precision and recall to move in opposite directions: improving one metric often leads to a decline in the other. For models that output a score or probability, this trade-off is driven largely by the classification threshold used to separate positive from negative predictions.

When the classification threshold is raised (made more conservative), fewer samples are classified as positive. This usually increases precision because false positives decrease. However, it can also reduce recall, because some actual positive samples now fall below the threshold and become false negatives.

Conversely, when the threshold is lowered (relaxed), more samples are classified as positive, which can only increase recall or leave it unchanged. However, this relaxation may introduce more false positives, reducing precision.
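The effect of moving the threshold is easy to see on a toy example. The scores and labels below are invented for illustration, and the rule “predict positive when score ≥ threshold” is an assumption about how a model’s outputs are turned into predictions.

```python
# Hypothetical model scores (e.g. predicted probability of passing) and true labels (1 = pass).
scores = [0.95, 0.85, 0.80, 0.70, 0.60, 0.55, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]


def precision_recall_at(threshold, scores, labels):
    """Precision and recall when every score >= threshold is predicted positive."""
    preds = [1 if s >= threshold else 0 for s in scores]
    tp = sum(p == 1 and y == 1 for p, y in zip(preds, labels))
    fp = sum(p == 1 and y == 0 for p, y in zip(preds, labels))
    fn = sum(p == 0 and y == 1 for p, y in zip(preds, labels))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Sweep from a strict threshold to a relaxed one.
for threshold in (0.9, 0.7, 0.5, 0.3):
    p, r = precision_recall_at(threshold, scores, labels)
    print(f"threshold={threshold:.1f}  precision={p:.3f}  recall={r:.2f}")
```

With these made-up numbers, lowering the threshold from 0.9 to 0.3 raises recall from 0.2 to 1.0 while precision falls from 1.0 to 0.625.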

Managing the Trade-off for Optimal Performance

Managing the precision-recall trade-off is crucial in achieving the optimal performance of a model. The choice between precision and recall depends on the problem context and its associated risks and costs.

To strike the right balance, consider the specific requirements of your application. Are false positives or false negatives more damaging? Understanding the impact of misclassifications on various stakeholders can guide you in making an informed decision.
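Once you know which error is more damaging, one common way to act on it is to fix a minimum acceptable recall and pick the threshold with the highest precision that still meets it. The sketch below uses invented scores and labels, tries every distinct score as a candidate threshold, and relies on a hypothetical `pick_threshold` helper; it is one simple recipe, not the only way to choose an operating point.

```python
# Hypothetical scores and true labels (1 = positive), invented for illustration.
scores = [0.95, 0.85, 0.80, 0.70, 0.60, 0.55, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]


def pick_threshold(scores, labels, min_recall):
    """Return (threshold, precision, recall) for the candidate threshold with the
    highest precision whose recall is at least min_recall, or None if none qualifies."""
    best = None
    for threshold in sorted(set(scores)):
        preds = [s >= threshold for s in scores]  # predict positive when score >= threshold
        tp = sum(p and y == 1 for p, y in zip(preds, labels))
        fp = sum(p and y == 0 for p, y in zip(preds, labels))
        fn = sum(not p and y == 1 for p, y in zip(preds, labels))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        if recall >= min_recall and (best is None or precision > best[1]):
            best = (threshold, precision, recall)
    return best


print(pick_threshold(scores, labels, min_recall=0.8))
```

On this toy data, requiring a recall of at least 0.8 selects a threshold of 0.6, where precision and recall are both 0.8. The same idea works in reverse if a minimum precision is the hard requirement.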

Beyond the trade-off, other factors like data quality, feature engineering, and model selection also contribute to the performance of a machine learning model. Continuous experimentation, incorporation of feedback, and iterative improvement are key in building models with high precision, recall, and overall efficacy.

In Conclusion

Precision and recall are invaluable metrics for evaluating model performance in machine learning. Understanding their definitions, trade-off, and calculation methods equips us with the tools to assess and optimize our models. By striking the right balance between precision and recall and considering the problem domain, we can build robust models that deliver the desired outcomes. So, embrace the power of precision and recall, and unlock the true potential of your machine learning endeavors.

Ready to elevate your business outcomes with the power of precision and recall in predictive analytics? Graphite Note is your go-to platform for no-code predictive analytics, designed for growth-focused teams and agencies without a data science background. Our intuitive platform empowers you to transform data into actionable insights and precise business strategies with just a few clicks. Whether you’re a data analyst or a domain expert, Graphite Note streamlines the process of building, visualizing, and explaining machine learning models for real-world applications. Don’t miss the opportunity to harness the full potential of your data. Request a Demo today and unlock the power of decision science with Graphite Note.