Random Forest is a powerful algorithm that has gained popularity in the field of machine learning. It is widely used for classification and regression tasks because of its versatility and accuracy. If you are new to the concept of Random Forest or want to enhance your existing knowledge, this comprehensive guide will take you through its inner workings, advantages, disadvantages, practical applications, and optimization techniques. By the end of this article, you’ll have a solid understanding of how Random Forest works and how it can be applied to various domains.

Understanding the Concept of Random Forest

Random Forest is an ensemble learning method that combines many decision trees to make predictions. Each tree in the forest is trained on a random sample of the training data and considers only a random subset of features at each split. The final prediction is made by aggregating the predictions of all the individual trees. This ensemble approach typically improves accuracy and generalization compared with a single decision tree.

The Basics of Random Forest

Random Forest has gained popularity because it handles complex problems well and delivers accurate predictions. It is based on ensemble learning, in which the predictions of multiple models are combined into a final prediction.

The basic idea behind Random Forest is to build a forest of decision trees, each trained on a bootstrap sample: a random sample of the training data drawn with replacement. This process, known as bootstrapping, introduces diversity into the forest. Combined with the aggregation step described next, it is called bagging (bootstrap aggregating). Because each tree sees a slightly different version of the data, the trees make different errors. Combining them reduces variance, which lowers the risk of overfitting and improves generalization.

Once the trees are trained, the final prediction is made by aggregating the predictions of all the individual trees. For classification this is a majority vote, and for regression it is the average of the trees' predictions. (Some implementations, such as scikit-learn, average the trees’ predicted class probabilities instead of counting hard votes.) The aggregated prediction is usually more accurate and robust than that of a single decision tree.
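The two aggregation rules can be made concrete with a minimal Python sketch. The function names and the five tree outputs are hypothetical. In a real forest, these predictions would come from running one input through each fitted tree.

```python
from collections import Counter
from statistics import mean


def aggregate_classification(tree_predictions):
    """Majority vote: return the class predicted by the most trees."""
    # most_common(1) returns [(label, count)]; ties go to the label seen first
    return Counter(tree_predictions).most_common(1)[0][0]


def aggregate_regression(tree_predictions):
    """Averaging: return the mean of the trees' numeric predictions."""
    return mean(tree_predictions)


# Hypothetical outputs of five trees for one input
print(aggregate_classification(["spam", "ham", "spam", "spam", "ham"]))  # spam
print(aggregate_regression([210.0, 190.0, 205.0, 195.0, 200.0]))  # 200.0
```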

Key Terminology in Random Forest

Before diving deeper into Random Forest, it’s essential to familiarize yourself with some key terms:

- Decision Trees: These are the building blocks of Random Forest. A decision tree makes predictions through a hierarchy of nodes and branches. Each internal node tests a feature (for example, age ≤ 40), each branch corresponds to an outcome of that test, and each leaf node holds a predicted class or value.

- Bootstrapping: A technique in which each tree is trained on a random sample of the training data drawn with replacement. Every tree sees a different sample, so no single tree is overly influenced by any particular portion of the data. This introduces diversity among the trees and reduces overfitting.

- Feature Selection: At each split, Random Forest considers only a random subset of the features. This technique is also called feature bagging. It differs from selecting a fixed set of features before training, because the subset is redrawn at every split. As a result, no single feature can dominate the trees, and the individual trees are not overly dependent on any particular feature.

- Ensemble Learning: Random Forest is an example of ensemble learning, where multiple models are combined to make a final prediction. The idea is that the combined prediction of many models is usually more accurate and robust than the prediction of a single model.

- Generalization: Generalization refers to a model’s ability to perform well on unseen data, and improving it is a central goal of Random Forest. Each tree is trained on a different sample of the data, and their predictions are aggregated. This reduces the risk of overfitting and makes predictions on new data more accurate.

By understanding these key terms, you will have a solid foundation for exploring Random Forest in more detail. Random Forest is a versatile algorithm that can be applied to a wide range of problems, including classification, regression, and ranking features by importance. Its ability to handle complex problems and provide accurate predictions makes it a popular choice among data scientists and machine learning practitioners.

The Inner Workings of Random Forest

Decision Trees: The Building Blocks

Decision trees are a fundamental component of Random Forest. Each decision tree is constructed by recursively splitting the data on a selected feature and a corresponding threshold. Each split is chosen to make the resulting groups as homogeneous as possible. Homogeneity is measured by an impurity criterion, such as Gini impurity or entropy for classification, or variance (mean squared error) for regression.
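The split search for a single continuous feature can be sketched in Python using Gini impurity, one of the common criteria. This is a simplified illustration, not any particular library's implementation. It scans the midpoints between sorted distinct values and keeps the threshold whose two child groups have the lowest size-weighted impurity.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0 for a pure group, higher for mixed groups."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_threshold(values, labels):
    """Return (threshold, weighted_impurity) for the best split of one feature.
    If no split beats the parent node, threshold is None."""
    distinct = sorted(set(values))
    best = (None, gini(labels))  # baseline: impurity of the unsplit node
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2  # candidate threshold between two observed values
        left = [y for x, y in zip(values, labels) if x <= t]
        right = [y for x, y in zip(values, labels) if x > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (t, score)
    return best


# Toy data: small values are class "a", large values are class "b"
print(best_threshold([1.0, 2.0, 3.0, 10.0, 11.0, 12.0], ["a", "a", "a", "b", "b", "b"]))
# (6.5, 0.0): the split at 6.5 yields two pure groups
```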

Each tree is grown on a different bootstrap sample and considers different random subsets of features, so every tree ends up with its own set of splits. This diversity lowers the correlation among the trees. It is what lets Random Forest handle complex problems while reducing overfitting.
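The benefit of this diversity can be stated as a standard result for averaging, under simplifying assumptions. Suppose each tree's prediction $T_b(x)$ at a point $x$ has the same variance $\sigma^2$, and every pair of trees has the same correlation $\rho$. The variance of the forest's average over $B$ trees is then:

```latex
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B} T_b(x)\right)
  = \frac{1}{B^2}\Bigl(B\sigma^2 + B(B-1)\rho\sigma^2\Bigr)
  = \rho\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
```

Adding trees shrinks only the second term. The first term, $\rho\sigma^2$, remains no matter how many trees are added. Lowering the correlation between trees, through bootstrapping and random feature subsets, is what reduces it.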

The Role of Bootstrapping in Random Forest

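Bootstrapping plays a crucial role in Random Forest. It involves randomly sampling the training data with replacement to create a separate training set for each decision tree. Each bootstrap sample is the same size as the original dataset. Because sampling is done with replacement, some instances appear more than once while others are left out. On average, a sample contains about 63.2% of the distinct original instances.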
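A short Python sketch shows what a bootstrap sample looks like. The helper `bootstrap_sample` is hypothetical. For a dataset of n rows, the chance that a given row is never drawn is (1 - 1/n)^n. This approaches 1/e ≈ 0.368 as n grows, so roughly 63.2% of the distinct rows end up in each sample.

```python
import random


def bootstrap_sample(n, rng):
    """Draw n row indices with replacement (one tree's training set) and
    return them along with the out-of-bag indices that were never drawn."""
    in_bag = [rng.randrange(n) for _ in range(n)]
    out_of_bag = sorted(set(range(n)) - set(in_bag))
    return in_bag, out_of_bag


rng = random.Random(42)  # fixed seed so the run is reproducible
n = 10_000
in_bag, oob = bootstrap_sample(n, rng)
print(len(in_bag))                     # 10000: same size as the original data
print(round(len(set(in_bag)) / n, 3))  # expected to be close to 0.632
print(round(len(oob) / n, 3))          # expected to be close to 0.368
```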

By training the decision trees on different samples, Random Forest captures various patterns within the dataset and reduces the influence of individual outliers or noisy samples. The instances left out of a tree’s sample, known as out-of-bag samples, also provide a built-in estimate of how well the model generalizes. This estimate does not require a separate validation set.
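Out-of-bag (OOB) evaluation can be sketched in Python. Each row is scored only by the trees whose bootstrap sample did not contain it. To keep the example short, one-split decision stumps on a single feature stand in for full trees. This is a simplification, and the function names are hypothetical.

```python
import random
from collections import Counter


def fit_stump(xs, ys):
    """Fit a one-split tree (a decision stump) on 1-D data, choosing the
    midpoint threshold with the fewest training errors."""
    majority = Counter(ys).most_common(1)[0][0]
    best_errors = sum(y != majority for y in ys)
    best_rule = (None, majority, majority)  # fallback: predict the majority class
    distinct = sorted(set(xs))
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2
        left = Counter(y for x, y in zip(xs, ys) if x <= t).most_common(1)[0][0]
        right = Counter(y for x, y in zip(xs, ys) if x > t).most_common(1)[0][0]
        errors = sum(y != (left if x <= t else right) for x, y in zip(xs, ys))
        if errors < best_errors:
            best_errors, best_rule = errors, (t, left, right)
    t, left, right = best_rule
    if t is None:
        return lambda x: majority
    return lambda x: left if x <= t else right


def oob_accuracy(xs, ys, n_trees, rng):
    """Train stumps on bootstrap samples and score each row using only the
    stumps whose bootstrap sample did not contain it."""
    n = len(xs)
    votes = [Counter() for _ in range(n)]  # out-of-bag votes per row
    for _ in range(n_trees):
        in_bag = [rng.randrange(n) for _ in range(n)]
        stump = fit_stump([xs[i] for i in in_bag], [ys[i] for i in in_bag])
        for i in set(range(n)) - set(in_bag):  # rows this stump never saw
            votes[i][stump(xs[i])] += 1
    scored = [i for i in range(n) if votes[i]]  # rows that were OOB at least once
    correct = sum(votes[i].most_common(1)[0][0] == ys[i] for i in scored)
    return correct / len(scored)


rng = random.Random(0)
xs = [rng.uniform(0, 10) for _ in range(200)]
ys = [int(x > 5) for x in xs]  # the true label depends on one threshold
print(oob_accuracy(xs, ys, n_trees=25, rng=rng))  # expected to be near 1.0 on this easy data
```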

Feature Selection in Random Forest

Feature selection is another key aspect of Random Forest. At each split in a decision tree, only a random subset of features is considered when searching for the best split. This keeps the trees from relying too heavily on any particular strong feature, which decorrelates them and leads to more robust predictions. Each split evaluates only some of the features, which also helps Random Forest handle high-dimensional datasets. A common default for classification is to consider about √p of the p available features at each split.
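Drawing the candidate features for one split can be sketched in Python. The function `features_for_split` and its `max_features` argument are hypothetical. The argument name mirrors scikit-learn’s `max_features` parameter, whose default for `RandomForestClassifier` is `"sqrt"`.

```python
import math
import random


def features_for_split(n_features, rng, max_features="sqrt"):
    """Pick the candidate feature indices examined at one split.
    'sqrt' uses about sqrt(n_features) of them, an int picks exactly that
    many, and None considers every feature (no feature randomness)."""
    if max_features == "sqrt":
        k = max(1, int(math.sqrt(n_features)))
    elif max_features is None:
        k = n_features
    else:
        k = max_features
    return sorted(rng.sample(range(n_features), k))


rng = random.Random(7)
for split in range(3):
    # Every split in every tree draws a fresh subset of the 16 features
    print(split, features_for_split(16, rng))  # 4 feature indices per split
```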

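Random Forest can handle both categorical and continuous features. For continuous features, it evaluates candidate thresholds between sorted values to find the best split. For categorical features, the number of ways to divide k categories into two groups is 2^(k-1) - 1, which grows quickly. Implementations therefore differ: some search over groupings of categories directly, while others require categorical features to be encoded numerically first.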
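The difference between the two kinds of candidate splits can be illustrated in plain Python. Both helpers are hypothetical sketches. The categorical one enumerates every two-group partition, which is feasible only for a small number of categories.

```python
from itertools import combinations


def continuous_thresholds(values):
    """Candidate thresholds: midpoints between consecutive distinct values."""
    distinct = sorted(set(values))
    return [(a + b) / 2 for a, b in zip(distinct, distinct[1:])]


def categorical_partitions(categories):
    """All ways to split the categories into two non-empty groups.
    Keeping the first category on the left avoids counting mirror images."""
    cats = sorted(set(categories))
    first, rest = cats[0], cats[1:]
    splits = []
    for size in range(len(rest) + 1):
        for extra in combinations(rest, size):
            left = {first, *extra}
            if len(left) < len(cats):  # the right group must be non-empty
                splits.append((left, set(cats) - left))
    return splits


print(continuous_thresholds([4.0, 1.0, 2.0, 2.0]))  # [1.5, 3.0]
print(len(categorical_partitions(["red", "green", "blue"])))  # 3, i.e. 2**(3-1) - 1
```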

Advantages and Disadvantages of Random Forest

Strengths of Using Random Forest

Random Forest offers several advantages that make it a popular choice among practitioners:

- High Accuracy: Random Forest generally provides high prediction accuracy, even with complex datasets, by leveraging the collective knowledge of multiple decision trees.

- Robustness: The ensemble nature of Random Forest makes it relatively robust to outliers and noisy data, and some implementations can also handle missing values directly.

- Feature Importance: Random Forest can assess the importance of different features, allowing you to identify the most influential ones for prediction.

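- Efficiency: The trees are built independently of one another, so training can be parallelized across CPU cores. This helps Random Forest scale to large datasets, and predictions are usually fast enough for many real-time applications.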
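One common way to measure feature importance is permutation importance. You shuffle one feature's values and measure how much accuracy drops. A from-scratch Python sketch follows, in which the fitted model is a hypothetical stand-in function. scikit-learn exposes impurity-based importances through a fitted forest's `feature_importances_` attribute. It also offers `sklearn.inspection.permutation_importance` for the permutation approach.

```python
import random


def accuracy(predict, rows, labels):
    """Fraction of rows whose prediction matches the label."""
    return sum(predict(r) == y for r, y in zip(rows, labels)) / len(labels)


def permutation_importance(predict, rows, labels, n_features, rng):
    """Importance of feature j = drop in accuracy after shuffling column j,
    which breaks that feature's link to the labels but keeps its distribution."""
    baseline = accuracy(predict, rows, labels)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(baseline - accuracy(predict, shuffled, labels))
    return importances


def model(row):
    """Hypothetical fitted model that only uses feature 0."""
    return int(row[0] > 0.5)


rng = random.Random(3)
rows = [[rng.random(), rng.random()] for _ in range(500)]
labels = [model(r) for r in rows]
print(permutation_importance(model, rows, labels, n_features=2, rng=rng))
# feature 0: a drop of roughly 0.5; feature 1: exactly 0.0 (the model ignores it)
```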

Potential Drawbacks of Random Forest

While Random Forest comes with many benefits, it also has a few limitations to consider:

- Interpretability: Random Forest models are not as interpretable as individual decision trees due to their ensemble nature.

- Computational Complexity: Training Random Forest models can be computationally expensive, and storing them can require substantial memory. This is especially true with many trees, many features, or very deep trees.

- Hyperparameter Tuning: Random Forest has several hyperparameters that require careful tuning to achieve optimal performance, which can be time-consuming.

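- Overfitting: While Random Forest reduces overfitting compared to individual decision trees, it can still overfit, for example when fully grown trees are fit to small or noisy datasets. Adding more trees, on the other hand, does not cause overfitting; it mainly increases computation time.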

Practical Applications of Random Forest

Random Forest in Predictive Analytics

Random Forest is widely used in predictive analytics for various domains, including healthcare, marketing, and fraud detection. Its ability to handle large and complex datasets, along with its high prediction accuracy, makes it a valuable tool for making informed predictions and identifying patterns.

For example, in healthcare, Random Forest can be used to predict disease outcomes, identify potential risk factors, and assist in the diagnosis of medical conditions.

Use of Random Forest in Bioinformatics

In bioinformatics, Random Forest has shown great success in analyzing biological data. It can be used for tasks such as protein structure prediction, gene expression analysis, and identifying disease biomarkers.

Random Forest’s ability to handle high-dimensional data and to rank features by importance makes it an excellent choice for extracting meaningful insights from biological datasets.

Random Forest in Financial Modeling

Random Forest is also widely employed in financial modeling and risk assessment. It can help in predicting stock prices, evaluating credit risk, detecting fraudulent transactions, and optimizing investment portfolios.

By utilizing the ensemble of decision trees and capturing complex relationships among financial variables, Random Forest offers valuable insights for making informed financial decisions.

Optimizing Random Forest Performance

Parameter Tuning for Better Results

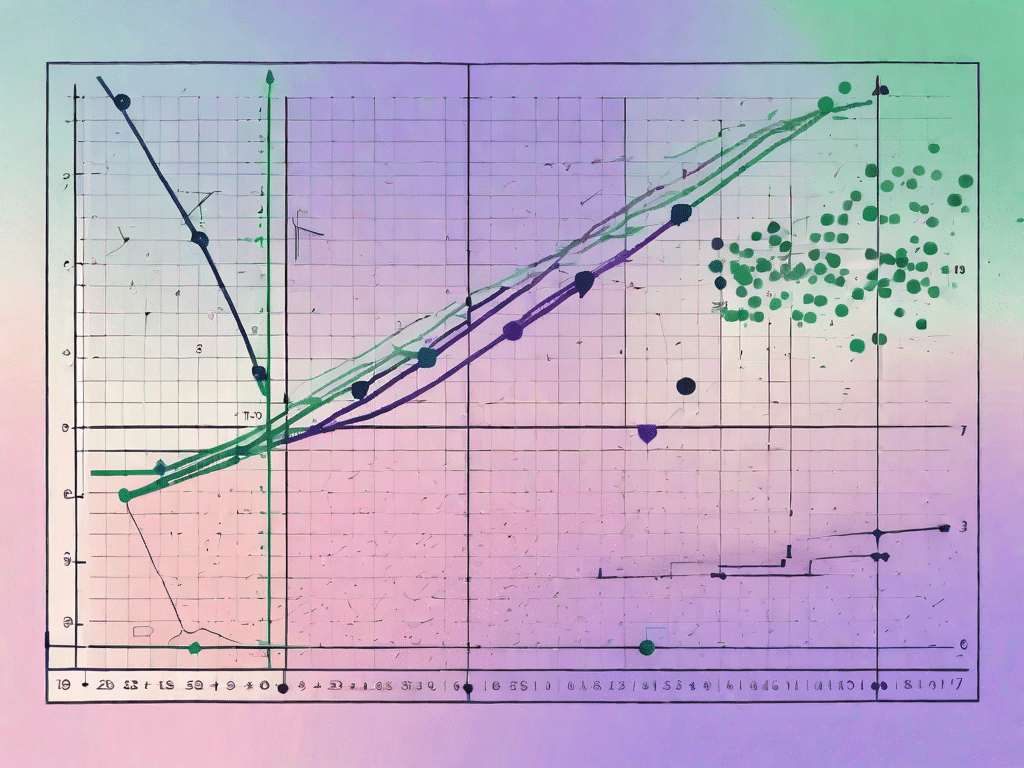

Random Forest has several hyperparameters that can be fine-tuned to improve its performance. Parameters like the number of decision trees, tree depth, and the number of features considered at each split can significantly impact the accuracy and efficiency of the model.

It is crucial to strike a balance between model complexity and generalization capability. Grid search and cross-validation techniques can be used to find the optimal set of hyperparameters for the given dataset.
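Grid search with k-fold cross-validation can be sketched in plain Python. To keep the example self-contained, the model sits behind a hypothetical `fit_and_score` callable. A real one would train a forest on the training indices and return its score on the validation indices. For readability, the grid keys reuse the names of scikit-learn’s `n_estimators`, `max_depth`, and `max_features` parameters. In practice, scikit-learn’s `GridSearchCV` performs this loop for `RandomForestClassifier`.

```python
from itertools import product
from statistics import mean


def k_fold_indices(n, k):
    """Split row indices 0..n-1 into k contiguous folds of near-equal size.
    (Shuffle or stratify the rows first when working with real data.)"""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in fold_sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def grid_search_cv(param_grid, fit_and_score, n, k=5):
    """Score every parameter combination by its mean validation score across
    k folds and return (best_params, best_mean_score)."""
    names = sorted(param_grid)
    folds = k_fold_indices(n, k)
    best = (None, float("-inf"))
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        scores = []
        for val_idx in folds:
            held_out = set(val_idx)
            train_idx = [i for i in range(n) if i not in held_out]
            scores.append(fit_and_score(params, train_idx, val_idx))
        score = mean(scores)
        if score > best[1]:
            best = (params, score)
    return best


grid = {"n_estimators": [100, 300], "max_depth": [4, 8, None], "max_features": ["sqrt", 0.5]}


def toy_fit_and_score(params, train_idx, val_idx):
    # Hypothetical stand-in: a real version would fit a forest with `params`
    # on train_idx and return its accuracy on val_idx.
    return 0.8 + (0.05 if params["max_depth"] == 8 else 0.0)


print(grid_search_cv(grid, toy_fit_and_score, n=100, k=5))
```

The search is exhaustive, so its cost grows with the product of the grid sizes times k. For larger grids, randomized search is a common cheaper alternative.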

Overfitting and Underfitting: Balancing Bias and Variance

Random Forest is designed to reduce overfitting compared to individual decision trees. However, it is still important to be aware of the bias-variance trade-off.

If the Random Forest model is too complex, it may overfit the training data, resulting in poor performance on unseen data. On the other hand, if the model is too simple, it may underfit the data, failing to capture the underlying patterns.

Regularization techniques like limiting tree depth, increasing the minimum number of samples required to split, and adjusting the maximum number of features considered can help strike the right balance between bias and variance.
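The effect of these limits can be seen in a minimal 1-D regression tree written in plain Python. It is an illustrative sketch, not a library implementation. The `max_depth` and `min_samples_split` arguments are named after the corresponding scikit-learn parameters. On noisy data, the unrestricted tree keeps splitting until every leaf holds a single row. This is exactly the memorizing behavior that regularization is meant to prevent.

```python
import random


def sse(values):
    """Sum of squared errors around the mean (the regression impurity)."""
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values)


def build_tree(xs, ys, depth=0, max_depth=None, min_samples_split=2):
    """Grow a 1-D regression tree. A node becomes a leaf when it is pure,
    has reached max_depth, or has fewer than min_samples_split rows."""
    if (len(ys) < min_samples_split
            or (max_depth is not None and depth >= max_depth)
            or sse(ys) == 0.0):
        return {"value": sum(ys) / len(ys)}
    distinct = sorted(set(xs))
    best = None
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        score = sse(left) + sse(right)
        if best is None or score < best[0]:
            best = (score, t)
    if best is None:  # every x is identical, so no split is possible
        return {"value": sum(ys) / len(ys)}
    t = best[1]
    go_left = [x <= t for x in xs]
    return {
        "threshold": t,
        "left": build_tree([x for x, g in zip(xs, go_left) if g],
                           [y for y, g in zip(ys, go_left) if g],
                           depth + 1, max_depth, min_samples_split),
        "right": build_tree([x for x, g in zip(xs, go_left) if not g],
                            [y for y, g in zip(ys, go_left) if not g],
                            depth + 1, max_depth, min_samples_split),
    }


def count_leaves(node):
    """Number of leaf nodes in a tree built by build_tree."""
    if "value" in node:
        return 1
    return count_leaves(node["left"]) + count_leaves(node["right"])


rng = random.Random(0)
xs = [i / 10 for i in range(40)]
ys = [x + rng.gauss(0, 0.3) for x in xs]  # a linear trend plus noise
for max_depth, min_split in [(None, 2), (3, 2), (None, 10)]:
    tree = build_tree(xs, ys, max_depth=max_depth, min_samples_split=min_split)
    print(max_depth, min_split, count_leaves(tree))
# The unrestricted tree ends with one leaf per training row (40 here);
# the restricted trees have fewer leaves, each averaging several rows.
```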

In conclusion, Random Forest is a powerful and versatile algorithm that can be applied to a wide range of data analysis and prediction tasks. By understanding its inner workings, advantages, disadvantages, practical applications, and optimization techniques, you can harness its full potential to make accurate predictions and gain meaningful insights from your data.

Whether you’re a beginner or an experienced practitioner, exploring and mastering Random Forest will undoubtedly enhance your machine learning skills and expand your problem-solving capabilities.

Ready to leverage the power of Random Forest for your business without the complexity of coding? Graphite Note simplifies predictive analytics, transforming your data into actionable insights with just a few clicks. Whether you’re a growth-focused team, an agency without a data science team, or a data analyst looking to harness AI, Graphite Note’s no-code platform is your gateway to precision-driven business outcomes. Don’t miss the opportunity to turn data into decisive action plans. Request a Demo today and unlock the full potential of decision science for your organization.