Data Cleaning for Machine Learning

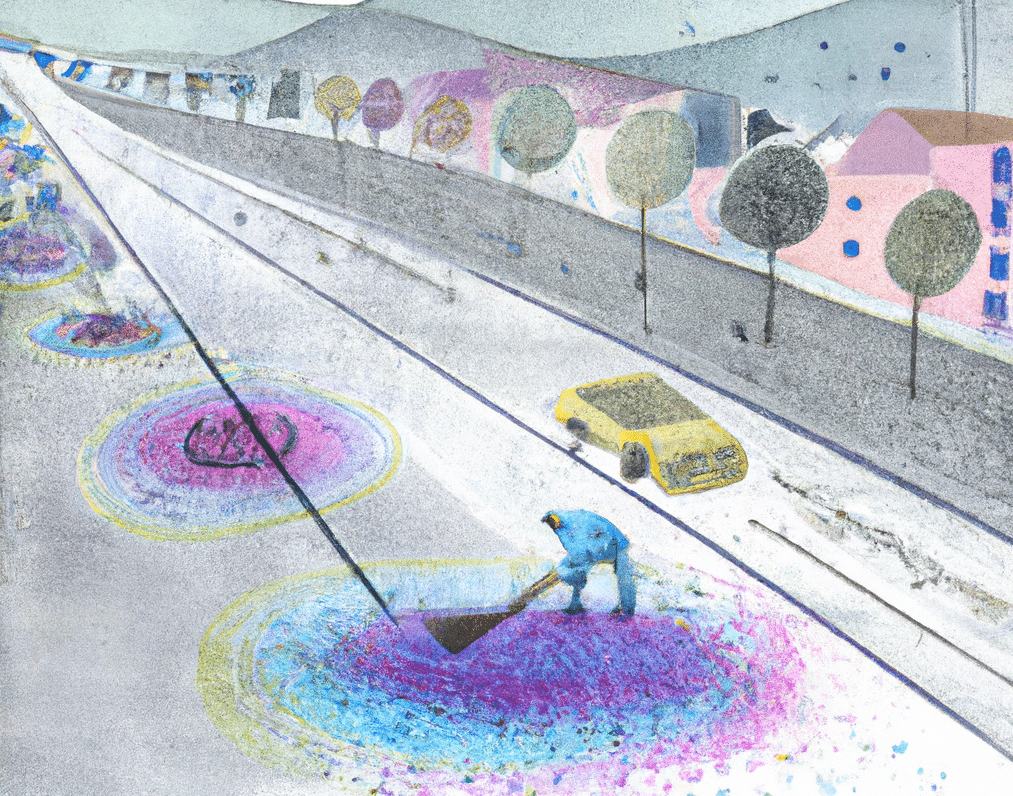

Data cleaning is a vital, yet often, overlooked step in the machine learning process. Data cleaning involves manually or automatically examining, transforming, and normalizing raw data so that it is formatted correctly for use with an AI model. Proper data cleaning techniques ensure that machine learning algorithms are unhindered by data problems. such as missing values, outliers, and duplication. Your data cleaning techniques can have an effect on your machine learning model output. To ensure your model produces accurate results, comprehensive and effective data cleaning tools and techniques must be used to ensure accuracy.

Introduction to Machine Learning

According to Statista, global investment and trend analysis show that spending on AI technologies is expected to increase annually by more than 17 percent over the next decade, with spending forecasted at $95 billion in 2024.Spending by governments and companies worldwide on AI technology topped $500 billion in 2023, according to IDC research. This shift can be attributed to organizations striving for more accurate decision-making based on actionable inputs derived from AI and predictive analytics. Machine learning involves using algorithms to enable computer systems to learn from data and make predictions or decisions without explicit programming. The machine learning algorithm learning process involves training data. Training data sets are used to develop machine learning models that can analyze new data and provide valuable insights. As a branch of data science, machine learning technology involves constructing and studying algorithms that learn from data and use that knowledge to make predictions by detecting patterns in the data. Using artificial intelligence (AI), machine learning empowers software applications to predict outcomes more accurately and automatically without being programmed to do so, while also enabling systems to learn and improve from experience.

Machine Learning and Data Cleaning

With advances such as automated data cleaning tools and no-code machine learning tools, it’s never been easier for businesses, large or small, to use data cleansing technology within their organization. Your machine learning project depends heavily on your interactive data cleaning approach, techniques, and methodology. As data scientists confirm: clean data leads to accurate results!

What Is Data Cleaning?

Data cleaning is a crucial step in the machine learning process. As a critical step, data cleaning ensures that the data you use to train machine learning models is accurate, consistent, and free of errors. The data cleaning process involves identifying and rectifying issues such as missing values, duplicate entries, and erroneous data, which are common in raw datasets. Using data cleaning tools and techniques, data scientists can transform dirty data into clean data, thereby improving the quality of the dataset and enhancing model accuracy. Interactive data cleaning enables dynamic engagement with the dataset, enabling data scientists to make real-time adjustments and corrections. This process is vital in a machine learning project, as the quality of input data directly impacts the performance of the machine learning model.

What is Clean Data?

Clean data is essential for effective model training, as it reduces the risk of incorporating bad data that can lead to inaccurate predictions. Data cleansing, also known as data scrubbing, involves several cleaning techniques, such as outlier detection and handling missing data. These techniques help in addressing data quality issues, ensuring that the dataset is reliable and suitable for analysis. Categorical variables may need to be standardized to avoid inconsistencies, and null values must be managed to prevent skewed results. The importance of data cleaning extends beyond just preparing data for machine learning models. It is a fundamental part of the data science workflow, affecting the overall success of a data science project. By maintaining high data quality, organizations can make better-informed decisions, leading to improved outcomes and efficiencies. Data cleaning is indispensable in the realm of machine learning and data science. It ensures that machine learning models are trained on quality data, leading to more accurate and reliable outcomes. As new data is continuously generated, ongoing data cleaning is necessary to maintain data integrity and support the evolving needs of machine learning applications.

The issues associated with getting your dataset “dirty” vary greatly depending on what kind of model or analysis you are running. Preventing these errors from occurring begins with good planning prior to using appropriate tools like validating input formats at the ingestion stage, producing reports that surface existing copies and invalid records.

Data Cleaning Initiatives

According to a Gartner survey of CIOs, data cleaning initiatives are among the top 10 priorities for AI investments. This isn’t surprising since poor data quality can lead to inaccurate results and missed opportunities. In fact, statistics show that businesses can lose over $3 trillion due to bad data every year.

Investing in proper data cleansing measures and technologies today might be expensive. But, for companies interested in reaping ROI from ML projects, there is no escaping its role as one needs clean datasets before feeding them onto predictive models. You need that step, so they work correctly & deliver meaningful information quickly and accurately throughout their respective processes.

According to recent statistics from Deloitte’s AI initiative, 63% of respondent companies with poor data could not realize any benefit or ROI from their analytics activities, owing to incomplete datasets and inconsistent formats.

Missing values are particularly problematic in ML applications because they cause models to use extreme assumptions when predicting future outcomes. This results in unreliable predictions that fail real-world tests for accuracy and precision.

How machine learning algorithms rely on clean, organized data

Machine learning algorithms are heavily reliant on the data that is input to them. With clean, organized datasets, these algorithms can be reliably and accurately used for predictive analysis or automated decision-making. According to a study by McKinsey & Company, only approximately 20% of data sets used in AI projects meet the quality standards necessary for reliable performance.

Data cleaning involves techniques such as data imputation, outlier detection and removal, duplicate record removal, and general consistency checks that help ensure any given dataset meets quality standards.

High-quality data can increase the accuracy of predictions and enable more informed decisions. However, low-quality datasets can lead to inaccurate estimations, missed opportunities, and even financial losses due to errors in models that typically rely on this type of data.

Data Cleaning Tools

Automated tools and services provide non-technical people with an efficient way to clean their datasets quickly and accurately without having to write any code at all – often resulting in far better insights than expected. Using automation and modern tools like these, organizations can unlock the full potential of machine learning with highly accurate results that support their business objectives in the future.

Properly addressing data quality issues is critical for any successful ML application as they directly affect the accuracy of its results. Recent studies have shown that up to 80% of a project’s time is spent cleaning and preparing datasets before analysis or model building begins. That demonstrates the importance of taking proactive steps toward improving data quality before beginning work with any ML project. Automating validation checks and auditing inputs and outputs will help ensure your datasets remain clean throughout the entire lifecycle. It will also help you save time on repetitive tasks so you can focus more on innovation instead.

Key advantages of preparing your dataset

Preparing your dataset before performing any analysis is paramount for efficiency. Clean and organized data can lead to more accurate, faster models since there is no need to spend time cleaning and organizing the data once it has been gathered.

Studies have shown that a well-prepared dataset can allow machine learning algorithms to train models up to three times faster than one with dirty data. This reduces the risk of errors due to wrong information in your dataset, meaning fewer costly mistakes.

Properly preparing your dataset also leads to improved accuracy in predictions from trained models, as dirty datasets may cause inaccurate results. It could be challenging to trace back the source of their origin, leading to poor decisions by a business or organization utilizing the model for its applications.

Recent reports suggest that companies investing in AI initiatives are seeing improvement in performance metrics by 10-20% due to properly prepared datasets, enabling more significant insights into different aspects of operations compared to those who don’t take these steps seriously.

Taking measures such as automated auditing processes and using modern-day solutions like automated tools and services can help ensure that you’re always working with optimal datasets throughout the life cycle of your project. This way, you’re able to ensure datasets are kept clean and organized without manually checking them every single time, saving time and resources. As organizations continue their journey towards digital transformation and leverage artificial intelligence technologies more frequently, data preparation will become increasingly important if they want successful outcomes from their projects.

How to clean your dataset

Start by understanding your project’s business context and objectives

Identify your project’s purpose and goals and the data to be used. This helps to ensure that you are working with clean datasets that meet your organization’s needs.

Identify what types of data (structured/unstructured) you need to work with

Once you understand what type of data needs to be cleaned, it’s time to start collecting and organizing it into usable formats for analysis or machine learning applications.

Inspect each dataset for potential missing values or outliers:

To ensure your dataset is consistent, look at each field for potential missing values or outliers that may skew results if not addressed beforehand.

Check for duplicates in your database

Having duplicate entries in your database can create many problems down the line when trying to conduct meaningful analysis or build models with ML algorithms. Ensure there are no duplicates before moving forward with further steps in the data-cleaning process.

Fill in any missing values

All missing values must be filled in with appropriate values or blanks if necessary to ensure accurate results when running analysis on a dataset. Depending on the type of data being worked with, this could involve imputing new information based on best guesses or removing them from consideration altogether, depending on the project’s context and complexity.

Remove any outliers if needed

An outlier is defined as an observation point that lies far outside other points in a given dataset. It can potentially lead to incorrect or misleading results if not removed from consideration during the preprocessing stage before feeding data into model training pipelines later on down the line during the development process. Outliers must be dealt with accordingly before introducing them into analytical procedures, so final results remain intact and reliable across the board regardless of the situation posed at hand.

Scale all features appropriately

Scaling features across datasets should be done according to the specific algorithm’s requirements. That means different approaches must be taken depending on the situation (i.e. normalizing ranges between 0-1 versus standardizing range between -1 +1 etc). This step goes hand-in-hand with feature engineering as well.

Handle categorical variables appropriately

Categorical variables need to be handled differently than numerical ones since they require special encoding techniques (i.e. one hot encoding versus label encoding).

Checking accuracy after implementing changes

After making adjustments, always double-check accuracy numbers, ensuring none has been negatively impacted.

Conclusion

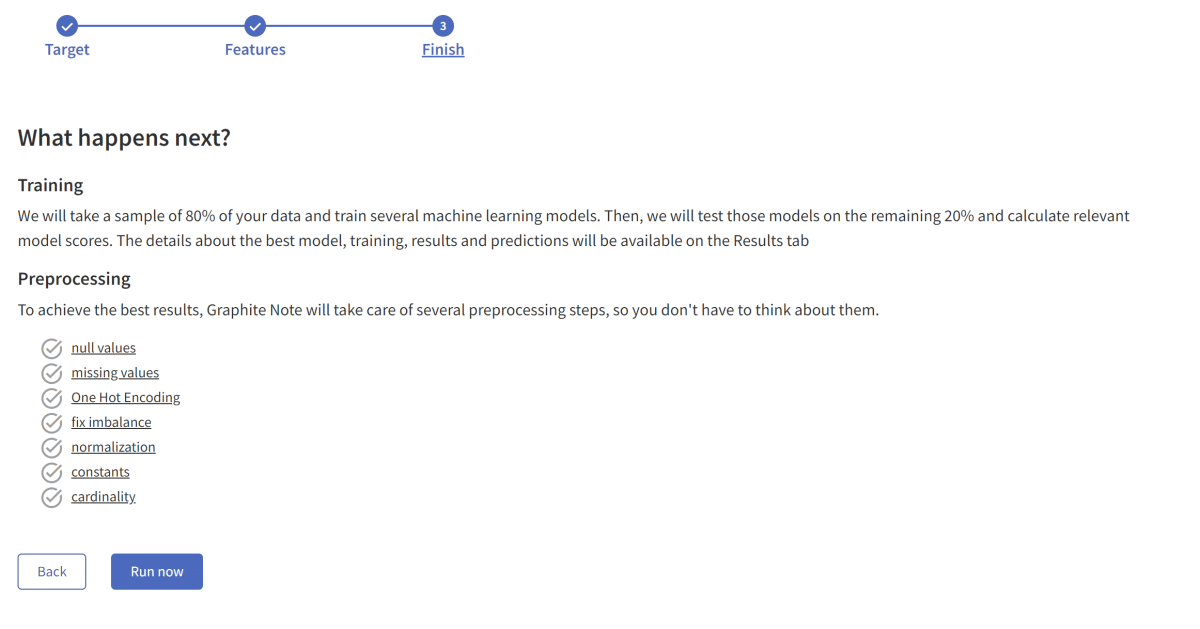

Data cleaning is essential to machine learning because it helps create reliable and accurate models. Poor data quality can cause various issues. That’s why data cleaning should be a priority for anyone who wants to build robust AI models and gain accuracy/speed gains. No-code tools and services have made it easier and faster to clean datasets without writing any code. No-code machine learning platforms like Graphite Note provide an array of benefits, as the platform takes care of data preprocessing, including filling in null values, identifying missing values, and eliminating collinearity. This allows users to quickly and accurately clean their datasets without writing any code. Graphite Note provides automated solutions for data cleaning, making it easier for non-technical professionals to get their datasets in order. The platform provides step-by-step guidance to help users understand the data cleaning process and apply it to their projects and machine learning models. Request a demo and let Graphite Note help you achieve maximum results in machine learning.

![A Comprehensive AI and Machine Learning Glossary of 100+ Key Terms [A-Z]](https://graphite-note.com/wp-content/uploads/2023/01/Blog-banners-2-1024x576.jpg)